Explainable AI using CBR Challenge 2023

challenge 223 schedule(NEW on 05-JULY-23 !) SCHEDULE and SUBMISSION GUIDELINE | CALL FOR PARTICIPATION | IMPORTANT DATES | TUTORIAL | PRIZES | COMMITTEE

EXTENDED submission deadline (explainer): 7th July 2023. Participants have until Friday 7th July to submit their Colab notebook containing their explainer, because the iSee team needs some time to add the explainer to the iSee library for you.

EXTENDED submission deadline (report and iSee use case): 17th July 2023. Participants will have the opportunity to complete their use case setup in the iSee Cockpit and to evaluate the explanations using the conversational tool. There will be time to complete your report or the online questionnaire and submit it by 13:00.

On the day in Aberdeen, you will have the opportunity to finish your task, either in the iSee Cockpit (use case and evaluation) or by completing the questionnaire, during the first slot (11:30-12:00). Cockpit URL (log in with your user account): https://cockpit-dev.isee4xai.com/usecases

In the third slot (16:00-17:30), you will have the opportunity to share the challenges you faced and your suggestions for enhancements or future applications (a 5-10 minute talk), and to receive your prize. Everyone will win a participation prize!

11:30 – 12:00 : Opening

12:00 – 13:00 : Contest + questionnaire (Lab room)

16:00 – 17:30 : Summary of the session, submission talks, award ceremony, Q&A.

You are asked to answer the questionnaire we have compiled. You can either write a short report and submit it by email to hello@isee4xai.com, or answer the online questionnaire directly (online questionnaire). Your answers should give the jury enough detail to understand the following points:

1) the explainer, if you have proposed one: the data type it works on, the technologies/model it uses, and a brief description of its logic;

2) the intent/goal the explanation is expected to address (for instance, the points you would set up in the iSee Cockpit);

3) how your explainer (explanation strategy) performs when applied to the chosen use case;

4) whether and how you used the Q&A dialog manager to evaluate the explainer results.

The recently released AI Index Report 2023 (Artificial Intelligence Index, stanford.edu) has confirmed the growing need for toolkits, frameworks, and platforms to evaluate AI models and their level of explicability. It has become clear that reaching the stage of trusted AI requires a multi-perspective co-creation approach, in which end users and designers share the knowledge and experience they gain. Evaluating the explanation methods of AI systems would then take two steps: first, a technical evaluation by the ML expert to assess reliability, and then subjective/qualitative feedback from the end user.

Within the iSee project, we advocate that past experience of applying an explanation method in certain use-case domains can be reused to generate a new explanation strategy (i.e. a combination of explanation methods). To support this, we have launched the iSee platform, which allows users to enter use cases describing the need to explain an AI system, ingest new explainers, assess their reusability across use cases, and evaluate the application of explanation methods through personalised questions.

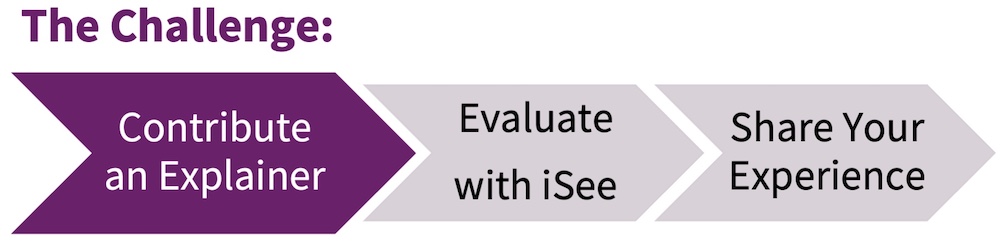

After a successful first edition at XCBR'22, we are organising a second contest on explicability using the platform launched in the iSee project. This year, the challenge focuses on evaluating how well explanation methods (new or existing) adapt to use cases; to that end, participants are advised to use the iSee tools to:

The iSee Cockpit is our graphical tool for modelling a use case and its user intents, checking the explanation strategies recommended for the case (which may or may not include the newly added explainer), and entering the questions that the iSee evaluation chatbot will ask to guide the end user in evaluating the explanation experience. The iSee team will offer all registered participants of the XCBR challenge a free online tutorial.

At every step, participants can be coached by an iSee researcher acting as a mentor, should they need help.

XCBR Challenge Call

In addition to the iSee Tutorial at ICCBR 2023, the iSee team is organising a dedicated tutorial to walk participants through the iSee tools. The tutorial will combine practical activities (video and online demo) in which participants can interact with iSee industry users and researchers. The following topics will be covered:

You are encouraged to have a look at our HOW TO GUIDELINES as well.

Anne Liret, BT, France

Bruno Fleisch, BT, France

Chamath Palihawadana, Robert Gordon University, UK

Marta Caro-Martinez, University Complutense of Madrid, Spain

Ikechukwu Nkisi-Orji, Robert Gordon University, UK

Jesus Miguel Darias-Goitia, University Complutense of Madrid, Spain

Preeja Pradeep, University College Cork, Ireland

A selection of prizes will be distributed to all participating teams, with an extra prize for the top contribution, chosen from the following: