Update (19 June 2025): There is a new presentation of the iSee Experiments accounts. Please download the file using the link below to use the most recent version of iSee (using LLM and CBR-RAG): iSee public june2025

There are increasing legal and social pressures for businesses to be able to explain the outcomes of complex systems. The iSee project was started in 2021 with the aim of recommending explanation strategies that suit the needs of users and evaluating their quality from a human perspective rather than from the machine side.

We are launching the iSee evaluation toolkit (a chatbot-like tool) and would like to ask for your help in trialing it. This will help the project team assess how interactions improve human perception of explanations.

Read the readme file to get some context and to access the sample data files that you can use when testing the chatbot. Then start interacting with the chatbot (click on “evaluate use case”). You can try it with multiple personas by clicking on the “Restart” button in the top left corner.

Below we are giving you 2 cases to test. Register first; if you want to evaluate the use case later, you can log back in to iSee at https://cockpit-dev.isee4xai.com/user/login with the same username and password that you entered when registering at the invite link.

You will be asked to go through the complete set of interactions offered by the chatbot and to evaluate whether the chatbot gives a meaningful answer to each query and, if not, what answer you would expect from your “human” perspective. We estimate that this will take 10 minutes.

Radiograph Fracture Detection (radiology)

Context and objective of the AI model: As input, the AI model receives radiographic images directly from medical imaging equipment (in this case, an x-ray machine). The goal of the AI system is to detect whether or not a fracture is present in the x-ray image. Many different kinds of fracture may be present in the data (e.g. different fracture types, different stages in the healing or treatment process), but the AI need only predict a binary classification of whether a fracture is present in the image or not.

Input: A radiographic image in PNG format.

Output: The probability of a fracture being detected (bounded between 0 and 1), with values closer to 0 indicating a low probability of fracture and values closer to 1 indicating a high probability of fracture.

Explanation Strategies:

There are three explanation methods used in this strategy:

- A feature importance based explanation, which overlays the query image with a heatmap to indicate which pixels contributed to the AI model’s classification.

- An example-based explanation, which shows similar images to the query to explain why an image was considered to have a fracture or not.

- A performance-based explainer, which describes the performance and relevant metrics about the AI model.

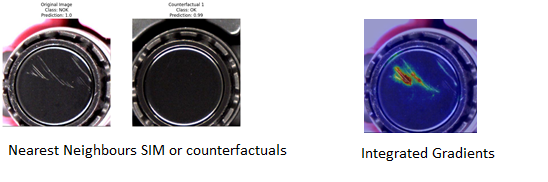

Sensor Anomaly Detection (image)

Context and objective of the AI model: As input, the AI model receives high-definition images of sensor components from a production line. The goal of the AI model is to detect defects in the manufactured components using computer vision techniques. The output of the AI model is a binary decision of whether to accept or reject a component, based upon whether defects have been detected.

Input: An image of a component.

Output: Accept (OK) or Reject (NOK).

Explanation Strategies:

There are 5 explanation methods used in this strategy:

- A feature importance based explanation, which overlays the query image with a heatmap to indicate which pixels contributed to the AI model’s classification.

- An example-based explanation, which shows similar images to the query to explain why an image was considered to have a defect or not.

- A performance-based explainer, which describes the performance using relevant metrics about the AI model (on a test dataset).

- A performance-based explainer, which provides a classification report describing the accuracy, macro F1 score, precision and recall of the AI model (on a test dataset).

- A performance-based explainer, which provides a confusion matrix describing the correctly and incorrectly classified examples in each class (on a test dataset).
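To make the metrics named in the two performance-based explainers above concrete, here is a minimal sketch of how a confusion matrix, accuracy, per-class precision and recall, and macro F1 can be computed for the OK/NOK decision. It is not the iSee implementation: the function names `confusion_matrix` and `summarize_performance` are hypothetical, and the labels in the example are made up for illustration.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    """Count (true, predicted) pairs; rows are true classes, columns are predictions."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


def summarize_performance(y_true, y_pred, labels):
    """Accuracy, macro F1, and per-class precision/recall/F1 (hypothetical helper)."""
    matrix = confusion_matrix(y_true, y_pred, labels)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(labels)))
    per_class = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        predicted = sum(matrix[r][i] for r in range(len(labels)))  # column sum
        actual = sum(matrix[i])  # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        per_class[label] = {"precision": precision, "recall": recall, "f1": f1}
    macro_f1 = sum(m["f1"] for m in per_class.values()) / len(labels)
    return {
        "accuracy": correct / total if total else 0.0,
        "macro_f1": macro_f1,
        "per_class": per_class,
        "confusion_matrix": matrix,
    }


# Made-up test-set labels, for illustration only.
y_true = ["OK", "OK", "OK", "OK", "NOK", "NOK", "NOK", "NOK"]
y_pred = ["OK", "OK", "OK", "NOK", "NOK", "NOK", "NOK", "OK"]
report = summarize_performance(y_true, y_pred, labels=["OK", "NOK"])
print(report["confusion_matrix"], report["accuracy"], report["macro_f1"])
```

Macro F1 averages the per-class F1 scores with equal weight, so a rarely occurring class such as NOK counts as much as the majority class.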

Example of Results

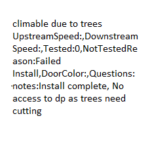

Telecom Notes-Based Decision About Next Planning Task

Objective: The AI system helps telecom engineers plan the different activities required for the installation of a network product on a customer's premises by recommending actions to perform their tasks. The recommendations are based on the notes provided by the network field engineers when they are sent to the customer site. These notes are processed by an AI model that suggests the next steps the engineers should perform or explains why the installation did not complete. The model consists of the following two components:

- a TF-IDF vectorizer that converts engineer notes to a high-dimensional TF-IDF representation.

- a KNN classifier that maps the TF-IDF converted documents to next step recommendations. 29 possible actions have been defined, represented in 29 different classes.

The model has been trained with 5,000+ notes and associated next steps.

As input, the AI model receives plain-text telecommunications engineer notes describing a task performed on a piece of equipment. The goal of the AI system is to recommend one of ~30 scenarios.
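The two-component pipeline described above (TF-IDF vectorizer followed by a KNN classifier) can be illustrated with a small, dependency-free sketch. It is not the project's code: the smoothed IDF formula, cosine similarity as the distance measure, `k=3`, and all function names, notes and labels below are assumptions chosen for illustration. The returned vote share is one possible confidence signal, not necessarily the one iSee uses.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def fit_idf(documents):
    """Smoothed inverse document frequency for each term in the training notes."""
    n_docs = len(documents)
    doc_freq = Counter(term for doc in documents for term in set(tokenize(doc)))
    return {t: math.log((1 + n_docs) / (1 + df)) + 1 for t, df in doc_freq.items()}


def tfidf_vector(text, idf):
    """Sparse, L2-normalised TF-IDF vector; terms unseen in training are ignored."""
    counts = Counter(t for t in tokenize(text) if t in idf)
    weights = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {t: w / norm for t, w in weights.items()} if norm else {}


def cosine(a, b):
    """Cosine similarity of two unit-length sparse vectors."""
    return sum(w * b.get(t, 0.0) for t, w in a.items())


def recommend(query, notes, labels, idf, k=3):
    """Majority next step among the k most similar notes, plus its vote share."""
    q = tfidf_vector(query, idf)
    ranked = sorted(
        ((cosine(q, tfidf_vector(n, idf)), label) for n, label in zip(notes, labels)),
        key=lambda pair: pair[0],
        reverse=True,
    )
    top = ranked[:k]
    votes = Counter(label for _, label in top)
    best, count = votes.most_common(1)[0]
    return best, count / len(top)


# Made-up engineer notes and next-step labels, for illustration only.
notes = [
    "fibre cable damaged at cabinet needs replacement",
    "damaged cable found replacement cable ordered",
    "customer not at home no access to premises",
    "no access customer absent rebook appointment",
    "router installed and tested service working",
]
labels = ["replace_cable", "replace_cable", "rebook_visit", "rebook_visit", "complete"]
idf = fit_idf(notes)
label, vote_share = recommend("cable damaged near cabinet", notes, labels, idf)
print(label, round(vote_share, 2))
```

In the real system the classifier chooses among 29 classes and is trained on thousands of notes; the toy data here only shows the mechanics of vectorizing a note and voting among its nearest neighbours.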

Explanation Strategies:

There are three explanation methods used in this strategy:

- A confidence-based explanation, indicating the AI model’s assuredness in a recommendation.

- A feature importance based explanation, which highlights overlapping text between the query note and historical notes to justify the recommendation of a scenario. This can be viewed as a list of words, or summarized as a sentence.

- An additional information explanation, which highlights potential hazards identified in the task note as points of interest which may influence decision-making.
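As an illustration of the feature importance based explanation in the list above, the following sketch finds the words shared by a query note and a historical note and presents them both as a list and as a sentence. It is a simplified assumption of how such an overlap could be computed, not the iSee explainer itself; the stopword list, the function names and the example notes are hypothetical.

```python
import string

# Small, hypothetical stopword list; a real system would likely use a fuller one.
STOPWORDS = {"the", "a", "an", "at", "to", "of", "and", "in", "on", "is", "was"}


def content_words(note):
    """Lower-cased words with surrounding punctuation stripped and stopwords removed."""
    words = (w.strip(string.punctuation) for w in note.lower().split())
    return {w for w in words if w and w not in STOPWORDS}


def overlap_explanation(query_note, historical_note):
    """Return the shared words as a sorted list and as a one-sentence summary."""
    shared = sorted(content_words(query_note) & content_words(historical_note))
    if not shared:
        return shared, "The query note shares no key words with the historical note."
    sentence = (
        "The query note and the historical note both mention: "
        + ", ".join(shared)
        + "."
    )
    return shared, sentence


# Made-up notes, for illustration only.
words, sentence = overlap_explanation(
    "Cable damaged near the cabinet.",
    "Fibre cable damaged at cabinet, needs replacement.",
)
print(words)
print(sentence)
```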