iSee: Intelligent Sharing of Explanation Experience by Users for Users

The right to obtain an explanation of a decision reached by a machine learning (ML) model is now enshrined in EU regulation. Different stakeholders (e.g. managers, developers and auditors) may have different background knowledge, competencies and goals, and so require different kinds of interpretations and explanations. Fortunately, there is a growing armoury of ways of interpreting ML models and explaining their predictions, recommendations and diagnoses. We will refer to these collectively as explanation strategies. As these explanation strategies mature, practitioners will gain experience that helps them know which strategies to deploy in different circumstances. What is lacking, and what we propose to address, is the science and technology for capturing, sharing and re-using explanation strategies based on similar user experiences, along with a much-needed route to explainable AI (XAI) compliance. Our vision is to improve every user’s experience of AI by harnessing experiences of best practice in XAI, by users for users.

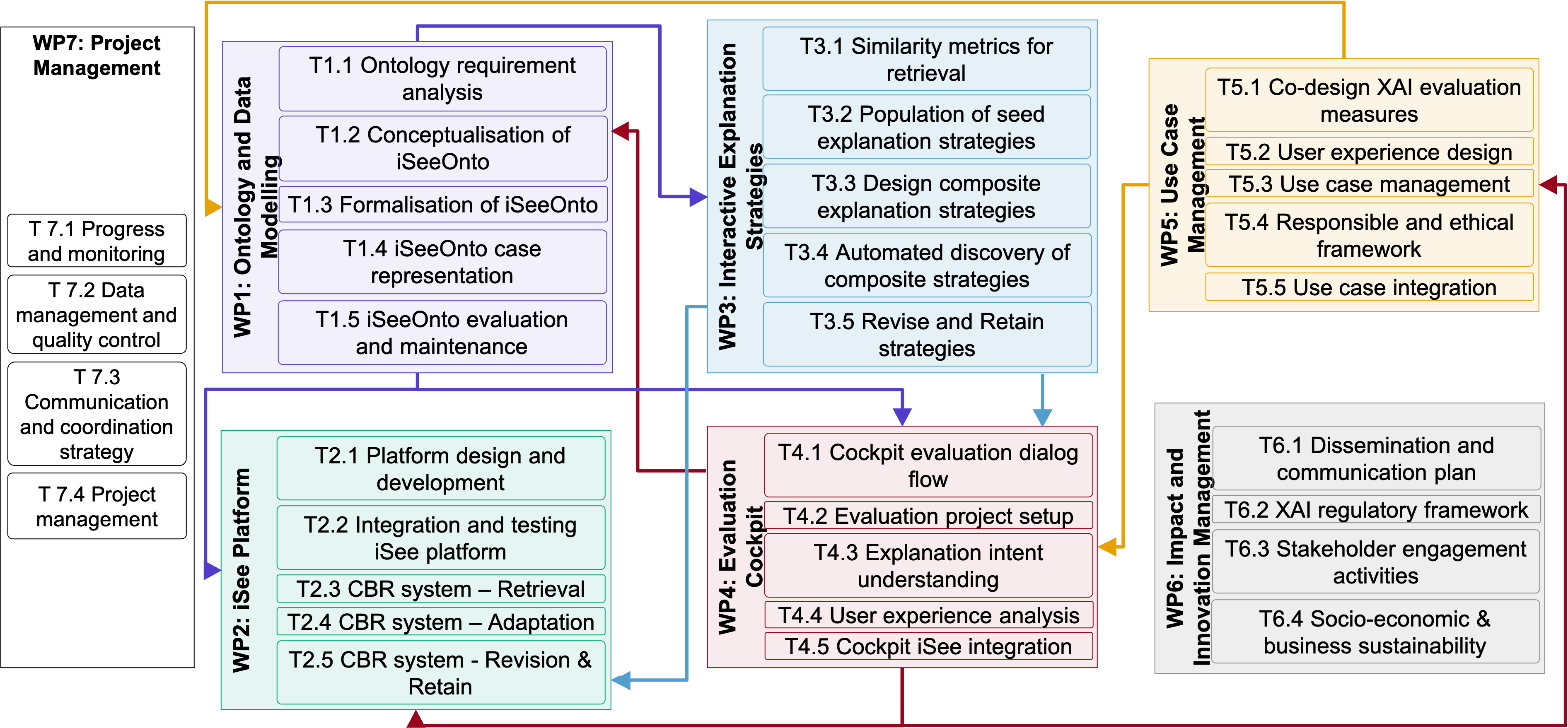

This WP develops successive versions of the iSee platform. Specifically, it defines the architecture, technologies, testing plans and external APIs, and integrates key platform components to achieve a fully functional case-based reasoning (CBR) engine able to retrieve, reuse, revise and retain explanatory experiences.
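To make the retrieve, reuse, revise and retain cycle concrete, the following is a minimal sketch of a CBR loop over explanation experiences. It is illustrative only and is not the iSee implementation: the `Case` and `CaseBase` classes, the attribute-overlap `similarity` function, and the example attributes and strategy names are all hypothetical assumptions introduced here.

```python
from dataclasses import dataclass, field


@dataclass
class Case:
    """One explanation experience: a situation and the strategy used for it."""
    problem: dict   # hypothetical attributes, e.g. {"user": "auditor"}
    strategy: list  # hypothetical explanation strategy names


def similarity(a: dict, b: dict) -> float:
    """Fraction of attributes (over the union of keys) that have equal values."""
    keys = set(a) | set(b)
    if not keys:
        return 0.0
    matches = sum(1 for k in keys if k in a and k in b and a[k] == b[k])
    return matches / len(keys)


@dataclass
class CaseBase:
    cases: list = field(default_factory=list)

    def retrieve(self, query: dict) -> Case:
        """Retrieve: return the stored case most similar to the query."""
        if not self.cases:
            raise LookupError("case base is empty")
        return max(self.cases, key=lambda c: similarity(c.problem, query))

    def reuse(self, retrieved: Case, query: dict) -> Case:
        """Reuse: propose the retrieved strategy for the new problem."""
        return Case(problem=dict(query), strategy=list(retrieved.strategy))

    def revise(self, proposed: Case, feedback: dict) -> Case:
        """Revise: replace strategies the user rejected with their substitutes."""
        revised = [feedback.get(s, s) for s in proposed.strategy]
        return Case(problem=proposed.problem, strategy=revised)

    def retain(self, case: Case) -> None:
        """Retain: store the revised experience for future queries."""
        self.cases.append(case)


# Hypothetical example data, assumed for illustration only.
base = CaseBase([
    Case({"user": "developer", "model": "image classifier"}, ["saliency map"]),
    Case({"user": "auditor", "model": "tabular classifier"}, ["feature importance"]),
])
query = {"user": "auditor", "model": "tabular classifier", "goal": "compliance"}

nearest = base.retrieve(query)
proposed = base.reuse(nearest, query)
final = base.revise(proposed, {"feature importance": "counterfactual"})
base.retain(final)
```

In this sketch the revise step is driven by a simple substitution map standing in for user feedback; a real platform would likely use richer case representations and similarity measures.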

In WP3, we will demonstrate the construction of explanations based on interactions with designer-users and, relatedly, the combination of previous explanation experiences to form new composite strategies. WP3 is central and interacts with all other WPs.

WP6 provides a participatory approach to integration of explainability into new and existing AI systems. We manage use case stakeholder activities (end-users, design users, framework contributors, policy makers); co-design iSee platform user experience and evaluation measures; validate acceptability and suitability measures; and define clear data ownership, ethics and interoperability issues of the iSee cockpit (WP4) with AI systems.
